Research Articles in Indexed Journals

AKFruitYield: Modular benchmarking and video analysis software for Azure Kinect cameras for fruit size and fruit yield estimation in apple orchards

Authors: Miranda, J.C., Arnó, J., Gené-Mola, J., Fountas, S., Gregorio, E. 2023. AKFruitYield: Modular benchmarking and video analysis software for Azure Kinect cameras for fruit size and fruit yield estimation in apple orchards. SoftwareX, 24, 101548. https://doi.org/10.1016/j.softx.2023.101548

AKFruitYield is a modular software that allows orchard data from RGB-D Azure Kinect cameras to be processed for fruit size and fruit yield estimation. Specifically, two modules have been developed: i) AK_SW_BENCHMARKER that makes it possible to apply different sizing algorithms and allometric yield prediction models to manually labelled color and depth tree images; and ii) AK_VIDEO_ANALYSER that analyses videos on which to automatically detect apples, estimate their size and predict yield at the plot or per hectare scale using the appropriate algorithms. Both modules have easy-to-use graphical interfaces and provide reports that can subsequently be used by other analysis tools.

This work was partly funded by the Department of Research and Universities of the Generalitat de Catalunya (grant 2017 SGR 646) and by the Spanish Ministry of Science and Innovation/AEI/10.13039/501100011033/ERDF (grants RTI2018-094222-B-I00 [PAgFRUIT project] and PID2021-126648OB-I00 [PAgPROTECT project]). The Secretariat of Universities and Research of the Department of Business and Knowledge of the Generalitat de Catalunya and European Social Fund (ESF) are also thanked for financing Juan Carlos Miranda's pre-doctoral fellowship (2020 FI_B 00586). The work of Jordi Gené-Mola was supported by the Spanish Ministry of Universities through a Margarita Salas postdoctoral grant funded by the European Union - NextGenerationEU. The authors would also like to thank the Institut de Recerca i Tecnologia Agroalimentàries (IRTA) for allowing the use of their experimental fields, and in particular Dr. Luís Asín and Dr. Jaume Lordán, who have contributed to the success of this work.

Fruit sizing using AI: A review of methods and challenges

Authors: Miranda, J.C., Gené-Mola, J., Zude-Sasse, M., Tsoulias, N., Escolà, A., Arnó, J., Rosell-Polo, J.R., Sanz-Cortiella, R., Martínez-Casasnovas, J.A., Gregorio, E. 2023. Fruit sizing using AI: A review of methods and challenges. Postharvest Biology and Technology, 206, 112587. https://doi.org/10.1016/j.postharvbio.2023.112587

Fruit size at harvest is an economically important variable for high-quality table fruit production in orchards and vineyards. In addition, knowing the number and size of the fruit on the tree is essential in the framework of precise production, harvest, and postharvest management. A prerequisite for analysis of fruit in a real-world environment is its detection and segmentation from the background signal. In the last five years, deep learning convolutional neural networks have become the standard method for automatic fruit detection, achieving F1-scores higher than 90 %, as well as real-time processing speeds. At the same time, different methods have been developed mainly for fruit size estimation and, more rarely, for fruit maturity estimation from 2D images and 3D point clouds. These sizing methods focus on a few species such as grape, apple, citrus, and mango, with mean absolute error values of less than 4 mm in apple fruit. This review provides an overview of the most recent methodologies developed for in-field fruit detection/counting and sizing, as well as a few upcoming examples of maturity estimation. Challenges, such as sensor fusion, highly varying lighting conditions, occlusions in the canopy, shortage of public fruit datasets, and opportunities for research transfer, are discussed.

This work was partly funded by the Department of Research and Universities of the Generalitat de Catalunya (grants 2017 SGR 646 and 2021 LLAV 00088) and by the Spanish Ministry of Science and Innovation MCIN/AEI/10.13039/501100011033 / FEDER (grants RTI2018-094222-B-I00 [PAgFRUIT project] and PID2021-126648OB-I00 [PAgPROTECT project]). The Secretariat of Universities and Research of the Department of Business and Knowledge of the Generalitat de Catalunya and European Social Fund (ESF) are also thanked for financing Juan Carlos Miranda’s pre-doctoral fellowship (2020 FI_B 00586). The work of Jordi Gené-Mola was supported by the Spanish Ministry of Universities through a Margarita Salas postdoctoral grant funded by the European Union - NextGenerationEU.

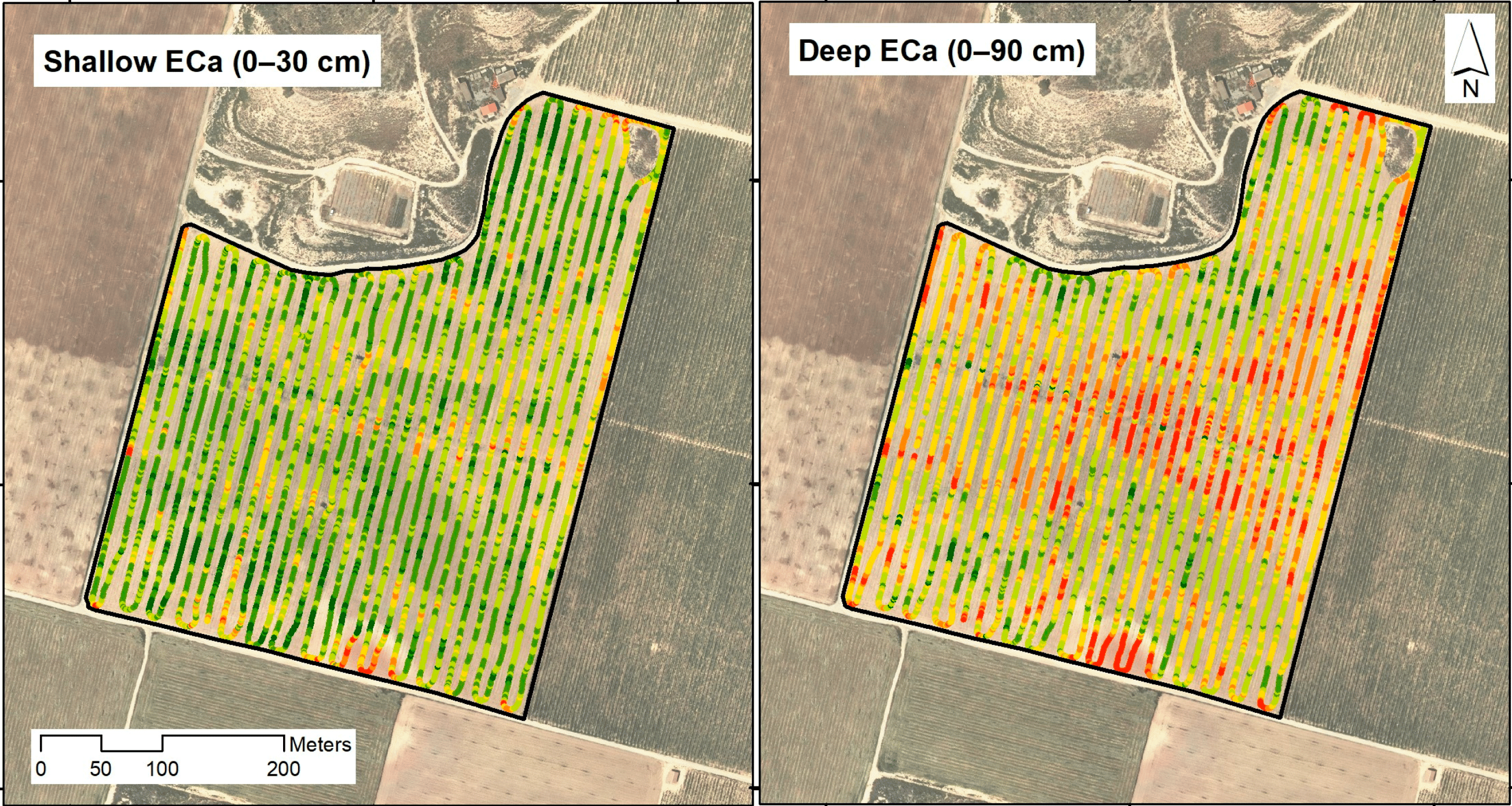

Drip Irrigation Soil-Adapted Sector Design and Optimal Location of Moisture Sensors: A Case Study in a Vineyard Plot

Authors: Arnó, J., Uribeetxebarria, A., Llorens, J., Escolà, A., Rosell-Polo, J.R., Gregorio, E., Martínez-Casasnovas, J.A. 2023. Drip Irrigation Soil-Adapted Sector Design and Optimal Location of Moisture Sensors: A Case Study in a Vineyard Plot. Agronomy, 13(9), 2369. https://doi.org/10.3390/agronomy13092369

To optimise sector design in drip irrigation systems, a two-stage procedure is presented and applied in a commercial vineyard plot. Soil apparent electrical conductivity (ECa) mapping and soil purposive sampling are the two stages on which the proposal is based. Briefly, ECa data to wet bulb depth provided by the VERIS 3100 soil sensor were mapped before planting using block ordinary kriging. Looking for simplicity and practicality, only two ECa classes were delineated from the ECa map (k-means algorithm) to delimit two potential soil classes within the plot with possible different properties in terms of potential soil water content and/or soil water regime. Contrasting the difference between ECa classes (through discriminant analysis of soil properties at different systematic sampling locations), irrigation sectors were then designed in size and shape to match the previous soil zoning. Taking advantage of the points used for soil sampling, two of these locations were finally selected as candidates to install moisture sensors according to the purposive soil sampling theory. As these two spatial points are expectedly the most representative of each soil class, moisture information in these areas can be taken as a basis for better decision-making for vineyard irrigation management.

This research was funded by ACCIÓ Generalitat de Catalunya, project COMRDI16-1-0031-06 (LISA: Low Input Sustainable Agriculture), within the COTPA RIS3CAT Community. Part of the funding came from the European Regional Development Fund (ERDF). In addition, part of the research is a result of the RTI2018-094222-B-I00 project (PAgFRUIT) funded by MCIN/AEI/10.13039/501100011033 and also by the ERDF.

Organic mulches as an alternative for under-vine weed management in Mediterranean irrigated vineyards: Impact on agronomic performance

Authors: Cabrera-Pérez, C., Llorens, J., Escolà, A., Royo-Esnal, A., Recasens, J. 2023. Organic mulches as an alternative for under-vine weed management in Mediterranean irrigated vineyards: Impact on agronomic performance. European Journal of Agronomy, 145, 126798. https://doi.org/10.1016/j.eja.2023.126798

One of the main challenges for organic vineyards is weed management. Weeds tend to compete for water and nutrients, and can cause large yield reductions. Traditional under-vine weed management in organic vineyards consists of mechanical cultivation throughout the season, which is associated with damage to the soil and to young vine roots, and with high fuel consumption. Thus, sustainable alternatives need to be found. Cover crops have become common in recent decades due to their multiple benefits in agroecosystems. Nevertheless, under-vine cover crop implementation in Mediterranean vineyards is limited because the cover competes for resources (water and nutrients), reducing the yield, vegetative development, and grape size of vines. The use of organic mulches could overcome all these problems, while benefitting vine performance. In the present work, the response of vines, soil and weeds to mulching was evaluated. An experiment was carried out in Raimat, Lleida (Catalonia, NE Spain) in a commercial vineyard from 2019 to 2021, and the following treatments were applied: 1) mechanical cultivation with an in-row tiller; 2) mowing a permanent spontaneous cover with an in-row mower; 3) almond shell mulch; and 4) chopped pine wood mulch. Results showed lower weed cover over the three seasons in mulched treatments, as well as higher yield, better vine water status, and greater vegetative development according to traditional measurements. The latter was confirmed, and analysed in further detail, with measurements acquired with a mobile terrestrial laser scanner (MTLS) based on light detection and ranging (LiDAR) sensors. In addition, petiole nutrient status was better in vines without a spontaneous living cover. Organic mulches improved vine performance and weed control, so these results can help to optimize water use efficiency in the Mediterranean basin, where water resources are scarce. Mulching can be considered a useful strategy that supports a more sustainable viticulture.

Simultaneous fruit detection and size estimation using multitask deep neural networks

Authors: Ferrer-Ferrer, M., Ruiz-Hidalgo, J., Gregorio, E., Vilaplana, V., Morros, J.R., Gené-Mola, J. 2023. Simultaneous fruit detection and size estimation using multitask deep neural networks. Biosystems Engineering, 233, 63-75, https://doi.org/10.1016/j.biosystemseng.2023.07.010

The measurement of fruit size is of great interest to estimate the yield and predict the harvest resources in advance. This work proposes a novel technique for in-field apple detection and measurement based on Deep Neural Networks. The proposed framework was trained with RGB-D data and consists of an end-to-end multitask Deep Neural Network architecture specifically designed to perform the following tasks: 1) detection and segmentation of each fruit from its surroundings; 2) estimation of the diameter of each detected fruit. The methodology was tested with a total of 15,335 annotated apples at different growth stages, with diameters varying from 27 mm to 95 mm. Results showed an F1-score for apple detection of 0.88 and a mean absolute error of diameter estimation of 5.64 mm. These are state-of-the-art results with the additional advantages of: a) using an end-to-end multitask trainable network; b) an efficient and fast inference speed; and c) being based on RGB-D data which can be acquired with affordable depth cameras. By contrast, the main disadvantage is the need to annotate a large amount of data with fruit masks and diameter ground truth to train the model. Finally, a fruit visibility analysis showed an improvement in the prediction when limiting the measurement to apples with visibility above 65% (mean absolute error of 5.09 mm). This suggests that future works should develop a method for automatically identifying the most visible apples and discarding the predictions for highly occluded fruits.

This work was partly funded by the Departament de Recerca i Universitats de la Generalitat de Catalunya (grant 2021 LLAV 00088), the Spanish Ministry of Science, Innovation and Universities (grants RTI2018-094222-B-I00 [PAgFRUIT project], PID2021-126648OB-I00 [PAgPROTECT project] and PID2020-117142GB-I00 [DeeLight project] by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union).

Relationship between yield and tree growth in almond as influenced by nitrogen nutrition

Authors: Sandonís-Pozo, L., Martínez-Casasnovas, J.A., Llorens, J., Escolà, A., Arnó, J., Pascual, M. 2023. Relationship between yield and tree growth in almond as influenced by nitrogen nutrition. Scientia Horticulturae, 321, 112353. https://doi.org/10.1016/j.scienta.2023.112353

Understanding the relationship between nitrogen (N), tree growth and yield can help to maximize productivity and sustainability. This study analyzed the effect of N on canopy development and its relation with yield in a super-intensive almond orchard in Spain over two seasons. The treatments included 50 kg N ha⁻¹, 100 kg N ha⁻¹, 150 kg N ha⁻¹, and 100 kg N ha⁻¹ applied between 3.1 and 7.7 Growth Stages, and their combinations with a nitrification inhibitor, DMPSA. The canopy was measured using LiDAR technology after pruning in spring and before harvest. Differences were found in canopy parameters when comparing early N (Nstop) against N applied throughout the season. The treatments N50, N100 and N150 resulted in higher cross sections and widths, less porosity and higher yield, fruit set and hull weights. In contrast, Nstop gave higher porosity and higher flower density. DMPSA produced more homogeneous canopies and improved N use efficiency when combined with N100 or N150. These findings provide evidence to support the management of N in super-intensive orchards.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by the EU's “ERDF, a way of making Europe”.

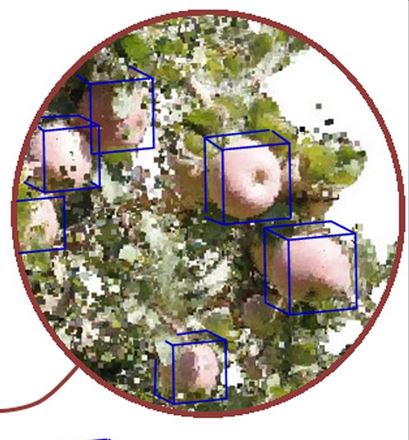

Looking behind occlusions: A study on amodal segmentation for robust on-tree apple fruit size estimation

Authors: Gené-Mola, J., Ferrer-Ferrer, M., Gregorio, E., Blok, P.M., Hemming, J., Morros, J.R., Rosell-Polo, J.R., Vilaplana, V., Ruiz-Hidalgo, J. 2023. Looking behind occlusions: A study on amodal segmentation for robust on-tree apple fruit size estimation. Computers and Electronics in Agriculture, 209, 107854. https://doi.org/10.1016/j.compag.2023.107854

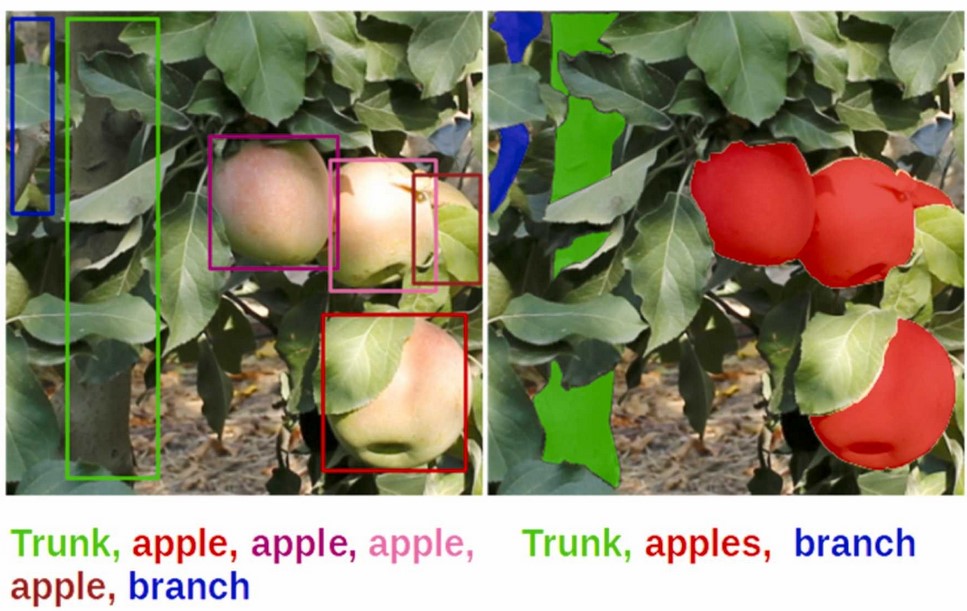

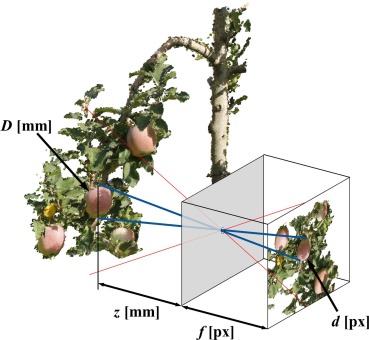

The detection and sizing of fruits with computer vision methods is of interest because it provides relevant information to improve the management of orchard farming. However, the presence of partially occluded fruits limits the performance of existing methods, making reliable fruit sizing a challenging task. While previous fruit segmentation works limit segmentation to the visible region of fruits (known as modal segmentation), in this work we propose an amodal segmentation algorithm to predict the complete shape, which includes its visible and occluded regions. To do so, an end-to-end convolutional neural network (CNN) for simultaneous modal and amodal instance segmentation was implemented. The predicted amodal masks were used to estimate the fruit diameters in pixels. Modal masks were used to identify the visible region and measure the distance between the apples and the camera using the depth image. Finally, the fruit diameters in millimetres (mm) were computed by applying the pinhole camera model. The method was developed with a Fuji apple dataset consisting of 3925 RGB-D images acquired at different growth stages with a total of 15,335 annotated apples, and was subsequently tested in a case study to measure the diameter of Elstar apples at different growth stages. Fruit detection results showed an F1-score of 0.86 and the fruit diameter results reported a mean absolute error (MAE) of 4.5 mm and R² = 0.80 irrespective of fruit visibility. Besides the diameter estimation, modal and amodal masks were used to automatically determine the percentage of visibility of measured apples. This feature was used as a confidence value, improving the diameter estimation to MAE = 2.93 mm and R² = 0.91 when limiting the size estimation to fruits detected with a visibility higher than 60%. The main advantages of the present methodology are its robustness for measuring partially occluded fruits and the capability to determine the visibility percentage. The main limitation is that depth images were generated by means of photogrammetry methods, which limits the efficiency of data acquisition. To overcome this limitation, future works should consider the use of commercial RGB-D sensors.
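
As a rough illustration of the last two steps described in this abstract, the sketch below converts a diameter measured in pixels into millimetres with the standard pinhole relation (size = pixels × depth / focal length) and computes a visibility percentage. The function names, the parameter names and the definition of visibility as the ratio of modal to amodal mask area are assumptions made for this example; they are not taken from the paper's code.

```python
def diameter_mm_from_pixels(diameter_px: float, depth_mm: float,
                            focal_length_px: float) -> float:
    """Pinhole camera model: real size = pixel size * depth / focal length.

    All three parameter names are hypothetical; focal_length_px is the
    camera focal length expressed in pixels.
    """
    if focal_length_px <= 0:
        raise ValueError("focal_length_px must be positive")
    return diameter_px * depth_mm / focal_length_px


def visibility_percent(modal_area_px: float, amodal_area_px: float) -> float:
    """Visible share of a fruit, assumed here to be modal / amodal mask area."""
    if amodal_area_px <= 0:
        raise ValueError("amodal_area_px must be positive")
    return 100.0 * modal_area_px / amodal_area_px


if __name__ == "__main__":
    # An apple spanning 50 px, seen at 1.5 m with a 1000 px focal length.
    print(diameter_mm_from_pixels(50, 1500, 1000))
    # 300 visible pixels out of a 500-pixel predicted full shape.
    print(visibility_percent(300, 500))
```

With such a visibility value, measurements below a chosen threshold (60% in the abstract) could simply be filtered out before reporting diameters.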

This work was partly funded by the Departament de Recerca i Universitats de la Generalitat de Catalunya (grant 2021 LLAV 00088), the Spanish Ministry of Science, Innovation and Universities (grants RTI2018-094222-B-I00 [PAgFRUIT project], PID2021-126648OB-I00 [PAgPROTECT project] and PID2020-117142GB-I00 [DeeLight project] by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union). The work of Jordi Gené Mola was supported by the Spanish Ministry of Universities through a Margarita Salas postdoctoral grant funded by the European Union - NextGenerationEU. We would also like to thank Nufri (especially Santiago Salamero and Oriol Morreres) for their support during data acquisition, and Pieter van Dalfsen and Dirk de Hoog from Wageningen University & Research for additional data collection used in the case study.

AKFruitData: A dual software application for Azure Kinect cameras to acquire and extract informative data in yield tests performed in fruit orchard environments

Authors: Miranda, J.C., Gené-Mola, J., Arnó, J., Gregorio, E., 2022. AKFruitData: A dual software application for Azure Kinect cameras to acquire and extract informative data in yield tests performed in fruit orchard environments. SoftwareX, 20, 101231. https://doi.org/10.1016/j.softx.2022.101231

The emergence of low-cost 3D sensors, and particularly RGB-D cameras, together with recent advances in artificial intelligence, is currently driving the development of in-field methods for fruit detection, size measurement and yield estimation. However, as the performance of these methods depends on the availability of quality fruit datasets, the development of ad-hoc software to use RGB-D cameras in agricultural environments is essential. The AKFruitData software introduced in this work aims to facilitate use of the Azure Kinect RGB-D camera for testing in field trials. This software presents a dual structure that addresses both the data acquisition and the data creation stages. The acquisition software (AK_ACQS) allows different sensors to be activated simultaneously in addition to the Azure Kinect. Then, the extraction software (AK_FRAEX) allows videos generated with the Azure Kinect camera to be processed to create the datasets, making available colour, depth, IR and point cloud metadata. AKFruitData has been used by the authors to acquire and extract data from apple fruit trees for subsequent fruit yield estimation. Moreover, this software can also be applied to many other areas in the framework of precision agriculture, thus making it a very useful tool for all researchers working in fruit growing.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

Satellite multispectral indices to estimate canopy parameters and within‑field management zones in super‑intensive almond orchards

Authors: Sandonís-Pozo, L., Llorens, J., Escolà, A., Arnó, J., Pascual, M., Martínez-Casasnovas, J.A., 2022. Satellite multispectral indices to estimate canopy parameters and within‑field management zones in super‑intensive almond orchards. Precision Agriculture, 2022, https://doi.org/10.1007/s11119-022-09956-6

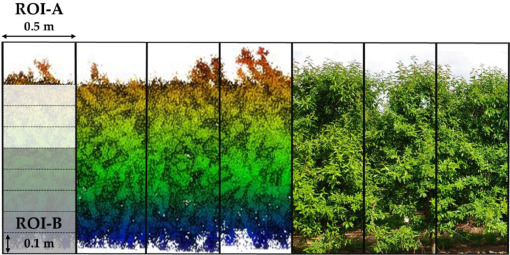

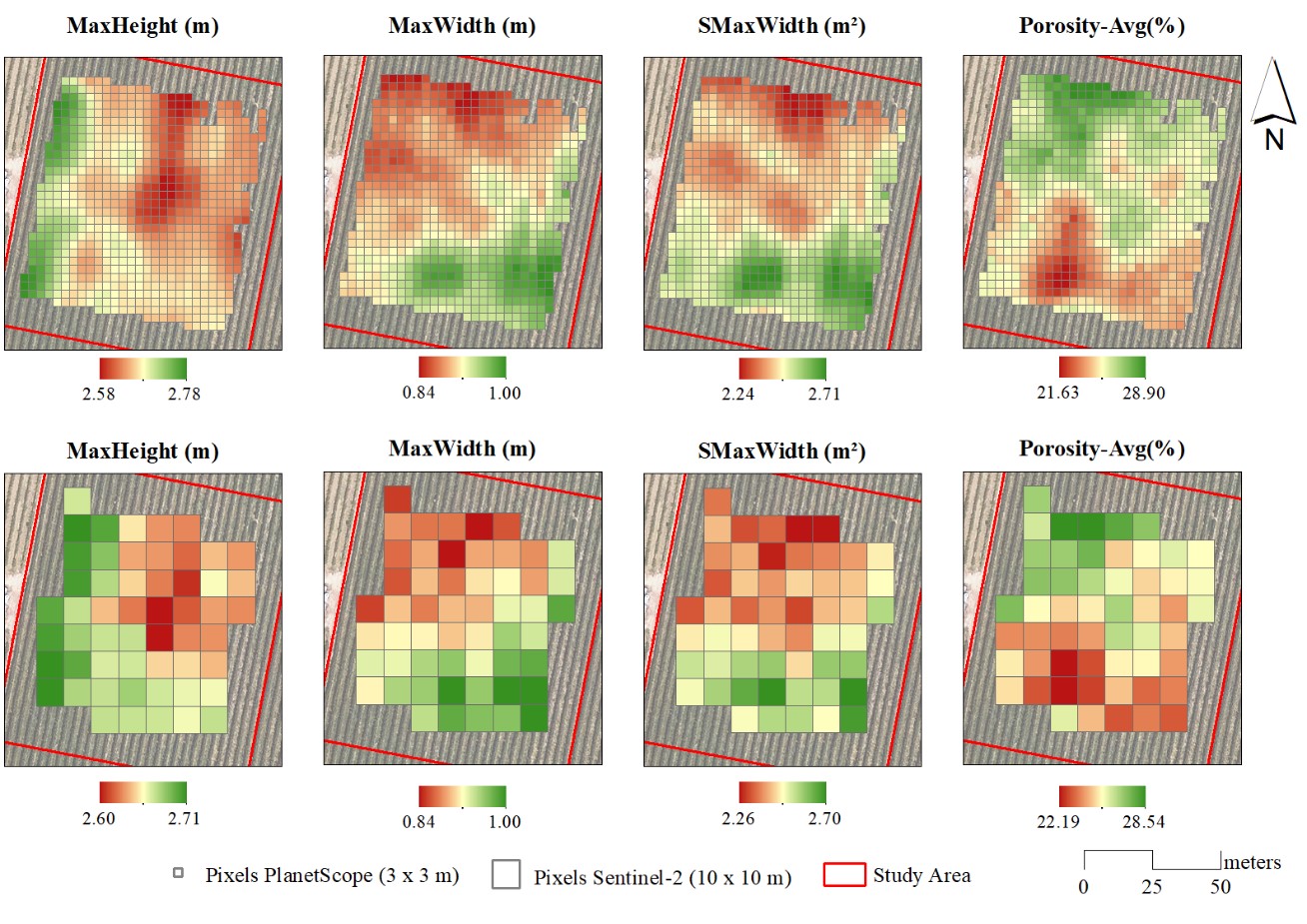

Continuous canopy status monitoring is an essential factor to support and precisely apply orchard management actions such as pruning, pesticide and foliar treatment applications, or fertirrigation, among others. To that end, this work proposes the use of multispectral vegetation indices to estimate geometric and structural orchard parameters from remote sensing images (high temporal and spatial resolution) as an alternative to more time-consuming processing techniques, such as LiDAR surveys or UAV photogrammetry. A super-intensive almond (Prunus dulcis) orchard was scanned using a mobile terrestrial laser scanner (LiDAR) in two different vegetative stages (after spring pruning and before harvesting). From the LiDAR point cloud, canopy orchard parameters, including maximum height and width, cross-sectional area and porosity, were summarized every 0.5 m along the rows and interpolated using block kriging to the pixel centroids of PlanetScope (3 × 3 m) and Sentinel-2 (10 × 10 m) image grids. To study the association between the LiDAR-derived parameters and 4 different vegetation indices, a canonical correlation analysis was carried out, showing the normalized difference vegetation index (NDVI) and the green normalized difference vegetation index (GNDVI) to have the best correlations. A cluster analysis was also performed. Results can be considered promising for both PlanetScope and Sentinel-2 images to delimit within-field management zones, being supported by significant differences in LiDAR-derived canopy parameters.
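
For readers unfamiliar with the two indices named in this abstract, the following minimal sketch shows their standard definitions: NDVI = (NIR − Red) / (NIR + Red) and GNDVI = (NIR − Green) / (NIR + Green). The function and argument names are chosen for this example, and the inputs are assumed to be per-pixel reflectance values; the sketch does not reproduce the paper's processing chain.

```python
def normalized_difference(band_a: float, band_b: float) -> float:
    """Generic normalized difference (a - b) / (a + b)."""
    total = band_a + band_b
    if total == 0:
        raise ValueError("band sum is zero; index is undefined")
    return (band_a - band_b) / total


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def gndvi(nir: float, green: float) -> float:
    """Green NDVI: same form as NDVI with the green band instead of red."""
    return normalized_difference(nir, green)


if __name__ == "__main__":
    # Illustrative reflectance values for a single vegetated pixel.
    print(round(ndvi(0.5, 0.1), 3))
    print(round(gndvi(0.5, 0.25), 3))
```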

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

Delineation of management zones in super-intensive almond orchards based on vegetation indices from UAV images validated by LiDAR-derived canopy parameters

Authors: Martínez-Casasnovas, J.A., Sandonís-Pozo, L., Escolà, A., Arnó, J., Llorens, J., 2022. Delineation of management zones in super-intensive almond orchards based on vegetation indices from UAV images validated by LiDAR-derived canopy parameters. Agronomy 12(1), 102. https://doi.org/10.3390/agronomy12010102

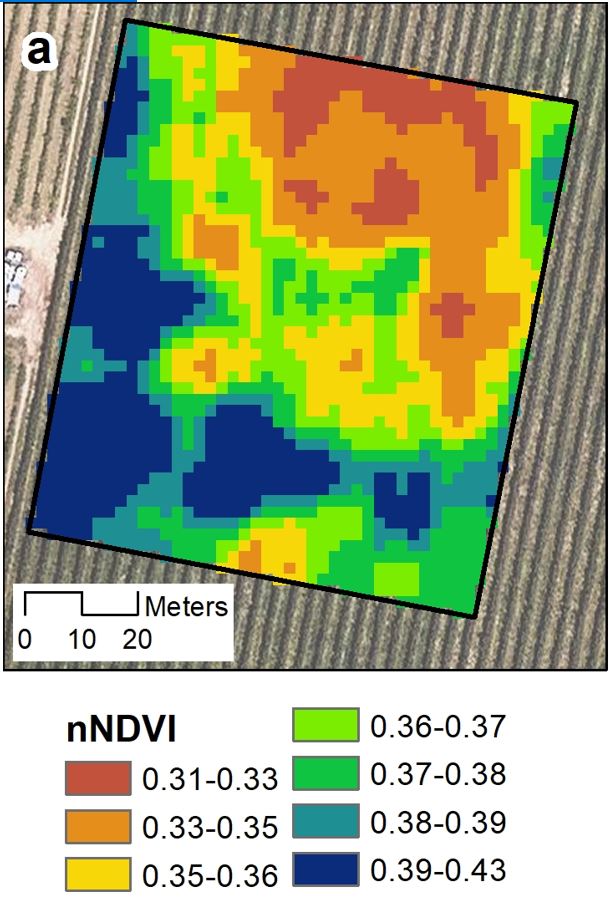

One of the challenges in orchard management, in particular of hedgerow tree plantations, is the delineation of management zones on the basis of high-precision data. Along this line, the present study analyses the applicability of vegetation indices derived from UAV images to estimate the key structural and geometric canopy parameters of an almond orchard. In addition, the classes created on the basis of the vegetation indices were assessed to delineate potential management zones. The structural and geometric orchard parameters (width, height, cross-sectional area and porosity) were characterized by means of a LiDAR sensor, and the vegetation indices were derived from a UAV-acquired multispectral image. Both datasets were summarized every 0.5 m along the almond tree rows and were used to interpolate continuous representations of the variables by means of geostatistical analysis. Linear and canonical correlation analyses were carried out to select the best performing vegetation index to estimate the structural and geometric orchard parameters in each cross-section of the tree rows. The results showed that NDVI averaged in each cross-section and normalized by its projected area achieved the highest correlations and served to define potential management zones. These findings expand the possibilities of using multispectral images in orchard management, particularly in hedgerow plantations.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

3D characterization of a Boston Ivy double-skin green building facade using a LiDAR system

Authors: Pérez, G., Escolà, A., Rosell-Polo, J.R., Coma, J., Arasanz, R., Marrero, B., Cabeza, L.F., Gregorio, E. 2021. 3D characterization of a Boston Ivy double-skin green building facade using a LiDAR system. Building and Environment, 206, 108320. https://doi.org/10.1016/j.buildenv.2021.108320

On the way to more sustainable and resilient urban environments, the incorporation of urban green infrastructure (UGI) systems, such as green roofs and vertical greening systems, must be encouraged. Unfortunately, given their variable nature, these nature-based systems are difficult to geometrically characterize, and therefore there is a lack of 3D objects that adequately reflect their geometry and analytical properties to be used in design processes based on Building Information Modelling (BIM) technologies. This fact can be a disadvantage, during the building's design phase, of UGIs over traditional grey solutions. Areas of knowledge such as precision agriculture, have developed technologies and methodologies that characterize the geometry of vegetation using point cloud capture. The main aim of this research was to create the 3D characterization of an experimental double-skin green facade, using LiDAR technologies.

From the results it could be confirmed that the methodology used was precise and robust, enabling the 3D reconstruction of the green facade's outer envelope. In detail, foliage volume differences in height were linked to plant growth, whereas differences in the horizontal distribution of greenery were related to the influence of the local microclimate and specific plant diseases on the south orientation. From this research, along with complementary previous research, it could be concluded that, generally speaking, with vegetation volumes of 0.2 m³/m², using Boston Ivy (Parthenocissus tricuspidata) under Mediterranean climate, reductions in external building surface temperatures of around 13 °C can be obtained and used as an analytic parameter in a future 3D-BIM-object.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

Comparison of 3D scan matching techniques for autonomous robot navigation in urban and agricultural environments

Authors: Guevara, D.J., Gené-Mola, J., Gregorio, E., Torres-Torriti, M., Reina, G., Auat Cheein, F. 2021. Comparison of 3D scan matching techniques for autonomous robot navigation in urban and agricultural environments. Journal of Applied Remote Sensing 15(2), 024508. https://doi.org/10.1117/1.JRS.15.024508 http://hdl.handle.net/10459.1/71527

Global navigation satellite system (GNSS) is the standard solution for solving the localization problem in outdoor environments, but its signal might be lost when driving in dense urban areas or in the presence of heavy vegetation or overhanging canopies. Hence, there is a need for alternative or complementary localization methods for autonomous driving. In recent years, exteroceptive sensors have gained much attention due to significant improvements in accuracy and cost-effectiveness, especially for 3D range sensors. By registering two successive 3D scans, known as scan matching, it is possible to estimate the pose of a vehicle. This work aims to provide in-depth analysis and comparison of the state-of-the-art 3D scan matching approaches as a solution to the localization problem of autonomous vehicles. Eight techniques (deterministic and probabilistic) are investigated: iterative closest point (with three different embodiments), normal distribution transform, coherent point drift, Gaussian mixture model, support vector-parametrized Gaussian mixture and the particle filter implementation. They are demonstrated in long path trials in both urban and agricultural environments and compared in terms of accuracy and consistency. On the one hand, most of the techniques can be successfully used in urban scenarios, with the probabilistic approaches showing the best accuracy. On the other hand, agricultural settings have proved to be more challenging, with significant errors even in short distance trials due to the presence of featureless natural objects. The results and discussion of this work will provide a guide for selecting the most suitable method and will encourage improvements that address the identified limitations.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

3D Spectral Graph Wavelet Point Signatures in Pre-Processing Stage for Mobile Laser Scanning Point Cloud Registration in Unstructured Orchard Environments

Authors: Guevara, D.J., Gené-Mola, J., Gregorio, E., Auat Cheein, F. 2021. 3D Spectral Graph Wavelet Point Signatures in Pre-Processing Stage for Mobile Laser Scanning Point Cloud Registration in Unstructured Orchard Environments. IEEE Sensors Journal. https://doi.org/10.1109/JSEN.2021.3129340

The use of three-dimensional registration techniques is an important component for sensor-based localization and mapping. Several approaches have been proposed to align three-dimensional data, obtaining meaningful results in structured scenarios. However, the increased use of high-frame-rate 3D sensors has led to more challenging application scenarios where the performance of registration techniques may degrade significantly. In order to improve the accuracy of the procedure, different works have considered a representative subset of points while preserving application-dependent features for registration. In this work, we tackle such a problem, considering the use of a general feature-extraction operator in the spectral domain as a prior step to the registration. The proposed spectral strategies use three wavelet transforms that are evaluated along with four well-known registration techniques. The methodology was experimentally validated in a dense orchard environment. The results show that the probability of failure in registration can be reduced by up to 12.04% for the evaluated approaches, leading to a significant increase in the localization accuracy. Those results validate the effectiveness and efficiency of the spectral-assisted registration algorithms in an agricultural setting and motivate their usage for a wider range of applications.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

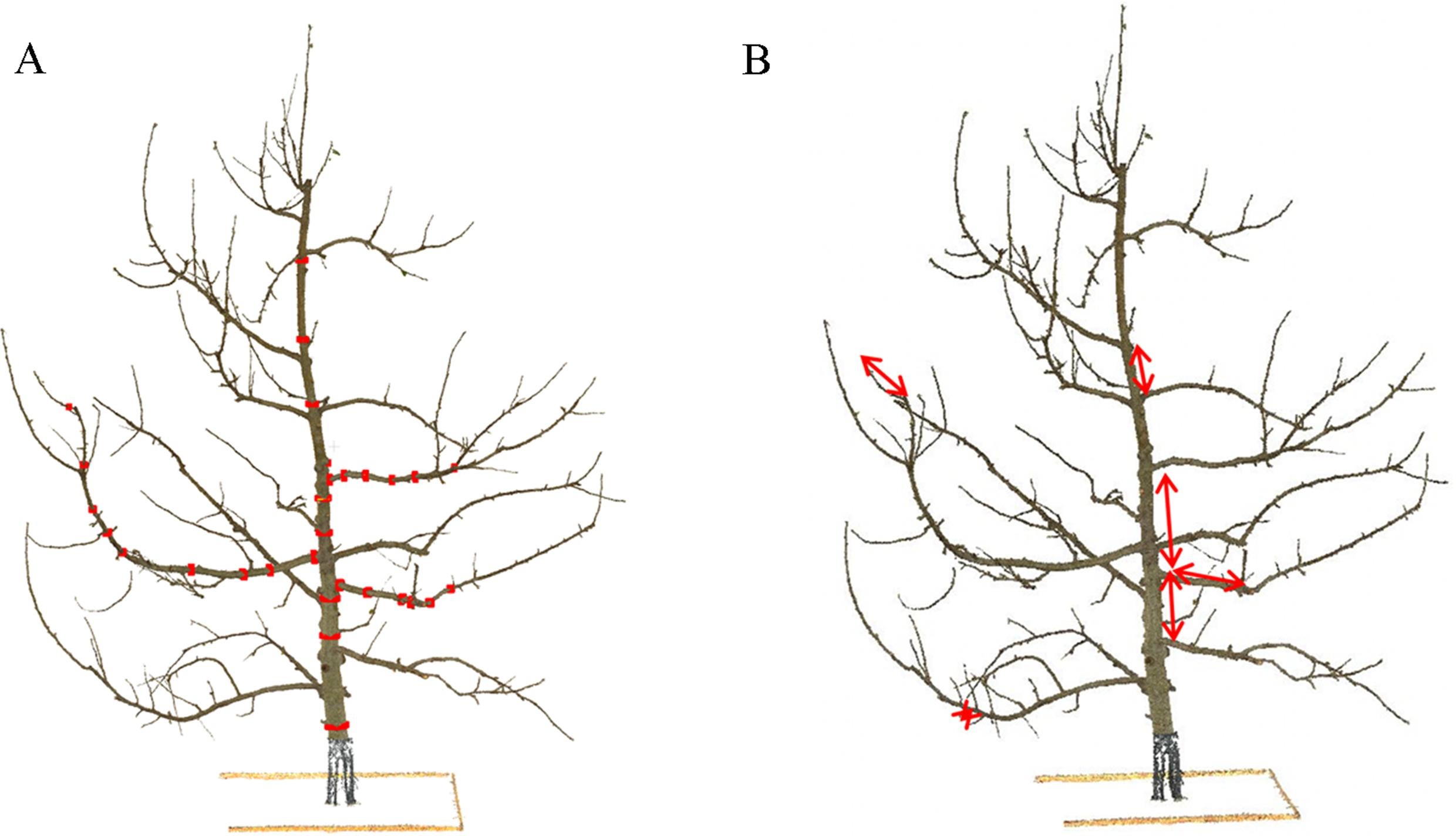

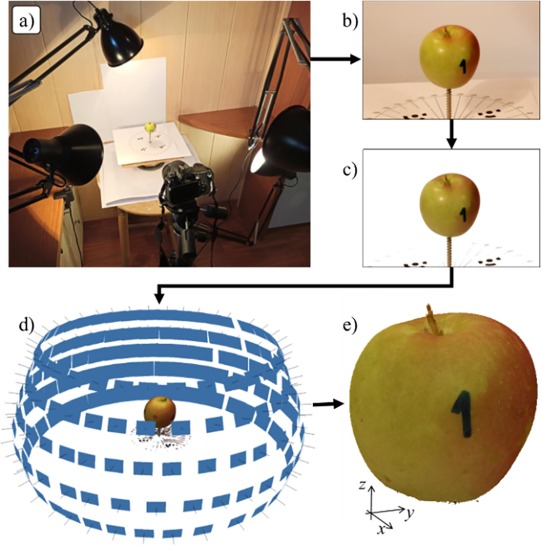

A photogrammetry-based methodology to obtain accurate digital ground-truth of leafless fruit trees

Authors: Lavaquiol, B., Sanz, R., Llorens, J., Arnó, J., Escolà, A. 2021. A photogrammetry-based methodology to obtain accurate digital ground-truth of leafless fruit trees. Computers and Electronics in Agriculture 191, 106553. https://doi.org/10.1016/j.compag.2021.106553

In recent decades, a considerable number of sensors have been developed to obtain 3D point clouds that have great potential in optimizing management in agriculture through the application of precision agriculture techniques. In order to use the data provided by these sensors, it is essential to know their measurement error. In this paper, a methodology is presented for obtaining a 3D point cloud of a defoliated fruit tree (Malus domestica Borkh.) trained in a central axis system, using stereophotogrammetry techniques based on structure-from-motion (SfM) and multi-view stereo-photogrammetry (MVS). The point cloud was built from a set of 288 photographs of the scene including the ground truth tree, and was used to generate the digital 3D model. The resulting point cloud was validated and proven to faithfully represent reality. The bias of the resulting model is −0.15 mm and 0.05 mm, for diameters and lengths, respectively. In addition, the presented methodology allows small changes in the actual ground truth tree to be detected as a consequence of the wood dehydration process. Having an actual and a digital ground-truth is the basis for validating other sensing systems for 3D vegetation characterization which can be used to obtain data to make more informed management decisions.

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

In-field apple size estimation using photogrammetry-derived 3D point clouds: Comparison of 4 different methods considering fruit occlusions

Authors: Gené-Mola, J., Sanz-Cortiella, R., Rosell-Polo, J.R., Escolà, A., Gregorio, E., 2021. In-field apple size estimation using photogrammetry-derived 3D point clouds: Comparison of 4 different methods considering fruit occlusions. Computers and Electronics in Agriculture 188, 106343. https://doi.org/10.1016/j.compag.2021.106343

Abstract: In-field fruit monitoring at different growth stages provides important information for farmers. Recent advances have focused on the detection and location of fruits, although the development of accurate fruit size estimation systems is still a challenge that requires further attention. This work proposes a novel methodology for automatic in-field apple size estimation which is based on four main steps: 1) fruit detection; 2) point cloud generation using structure-from-motion (SfM) and multi-view stereo (MVS); 3) fruit size estimation; and 4) fruit visibility estimation. Four techniques were evaluated in the fruit size estimation step. The first consisted of obtaining the fruit diameter by measuring the two most distant points of an apple detection (largest segment technique). The second and third techniques were based on fitting a sphere to apple points using least squares (LS) and M-estimator sample consensus (MSAC) algorithms, respectively. Finally, template matching (TM) was applied for fitting an apple 3D model to apple points. The best results were obtained with the LS, MSAC and TM techniques, which showed mean absolute errors of 4.5 mm, 3.7 mm and 4.2 mm, and coefficients of determination (R²) of 0.88, 0.91 and 0.88, respectively. Besides fruit size, the proposed method also estimated the visibility percentage of apples detected. This step showed an R² of 0.92 with respect to the ground truth visibility. This allowed automatic identification and discrimination of the measurements of highly occluded apples. The main disadvantage of the method is the high processing time required (in this work 2760 s for 3D modelling of 6 trees), which limits its direct application in large agricultural areas. The code and the dataset have been made publicly available and a 3D visualization of results is accessible at http://www.grap.udl.cat/en/publications/apple_size_estimation_SfM.
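To make the difference between the largest segment technique and least-squares sphere fitting more concrete, the following is a minimal sketch in plain Python. It is not the authors' published code: the function names, the algebraic formulation of the fit and the synthetic "visible cap" of points are all assumptions made for illustration.

```python
import math


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. coplanar points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_sphere_ls(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Uses x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d with d = r^2 - (a^2 + b^2 + c^2).
    """
    n = len(points)
    # Centre the data first to keep the normal equations well conditioned.
    mean = [sum(p[k] for p in points) / n for k in range(3)]
    shifted = [tuple(p[k] - mean[k] for k in range(3)) for p in points]
    rows = [(2 * x, 2 * y, 2 * z, 1.0) for x, y, z in shifted]
    rhs = [x * x + y * y + z * z for x, y, z in shifted]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * f for r, f in zip(rows, rhs)) for i in range(4)]
    a, b, c, d = solve_linear(ata, atb)
    radius = math.sqrt(d + a * a + b * b + c * c)
    return (a + mean[0], b + mean[1], c + mean[2]), radius


def largest_segment(points):
    """Largest segment technique: distance between the two most distant points."""
    return max(math.dist(p, q) for p in points for q in points)


def synthetic_cap(center, radius, max_polar_deg=75.0):
    """Synthetic, noise-free points on the sensor-facing cap of a sphere (illustrative data)."""
    cx, cy, cz = center
    pts = []
    for i in range(1, 6):
        theta = math.radians(max_polar_deg) * i / 5
        for j in range(8):
            phi = 2 * math.pi * j / 8
            pts.append((cx + radius * math.sin(theta) * math.cos(phi),
                        cy + radius * math.sin(theta) * math.sin(phi),
                        cz + radius * math.cos(theta)))
    return pts


if __name__ == "__main__":
    cap = synthetic_cap((100.0, 50.0, 1500.0), 37.5)  # hypothetical apple, units in mm
    _, r = fit_sphere_ls(cap)
    print(f"LS sphere diameter: {2 * r:.1f} mm")
    print(f"largest segment:    {largest_segment(cap):.1f} mm")
```

On this noise-free cap, the sphere fit recovers the full diameter even though only part of the surface is sampled, whereas the largest segment is limited to the extent of the visible points. Real point clouds contain noise and outliers, which is where a robust estimator such as MSAC becomes relevant.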

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.

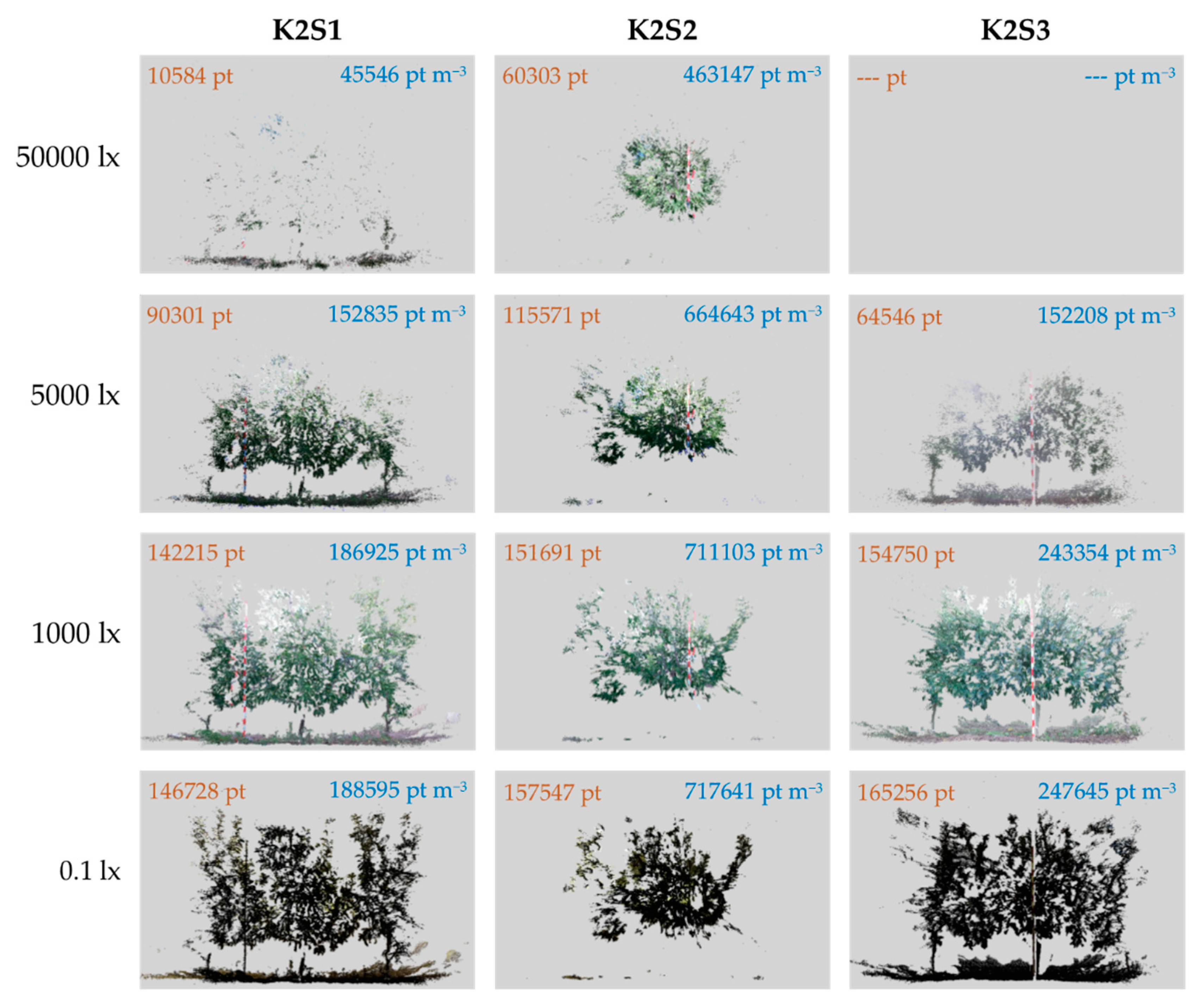

Assessing the Performance of RGB-D Sensors for 3D Fruit Crop Canopy Characterization under Different Operating and Lighting Conditions

Authors: Gené-Mola, J., Llorens, J., Rosell-Polo, J.R., Gregorio, E., Arnó, J., Solanelles, F., Martínez-Casasnovas, J.A., Escolà, A., 2020. Assessing the Performance of RGB-D Sensors for 3D Fruit Crop Canopy Characterization under Different Operating and Lighting Conditions. Sensors 20(24), 7072; https://doi.org/10.3390/s20247072

Abstract: The use of 3D sensors combined with appropriate data processing and analysis has provided tools to optimise agricultural management through the application of precision agriculture. The recent development of low-cost RGB-Depth cameras has presented an opportunity to introduce 3D sensors into the agricultural community. However, due to the sensitivity of these sensors to highly illuminated environments, it is necessary to know under which conditions RGB-D sensors are capable of operating. This work presents a methodology to evaluate the performance of RGB-D sensors under different lighting and distance conditions, considering both geometrical and spectral (colour and NIR) features. The methodology was applied to evaluate the performance of the Microsoft Kinect v2 sensor in an apple orchard. The results show that sensor resolution and precision decreased significantly under middle to high ambient illuminance (>2000 lx). However, this effect was minimised when measurements were conducted closer to the target. In contrast, illuminance levels below 50 lx affected the quality of colour data and may require the use of artificial lighting. The methodology was useful for characterizing sensor performance throughout the full range of ambient conditions in commercial orchards. Although Kinect v2 was originally developed for indoor conditions, it performed well under a range of outdoor conditions.

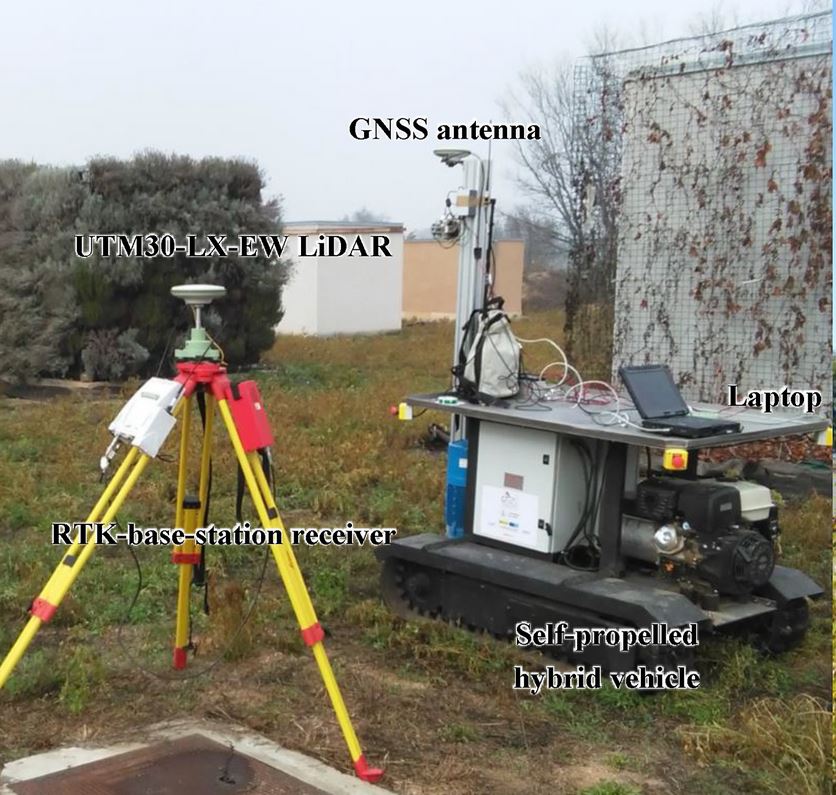

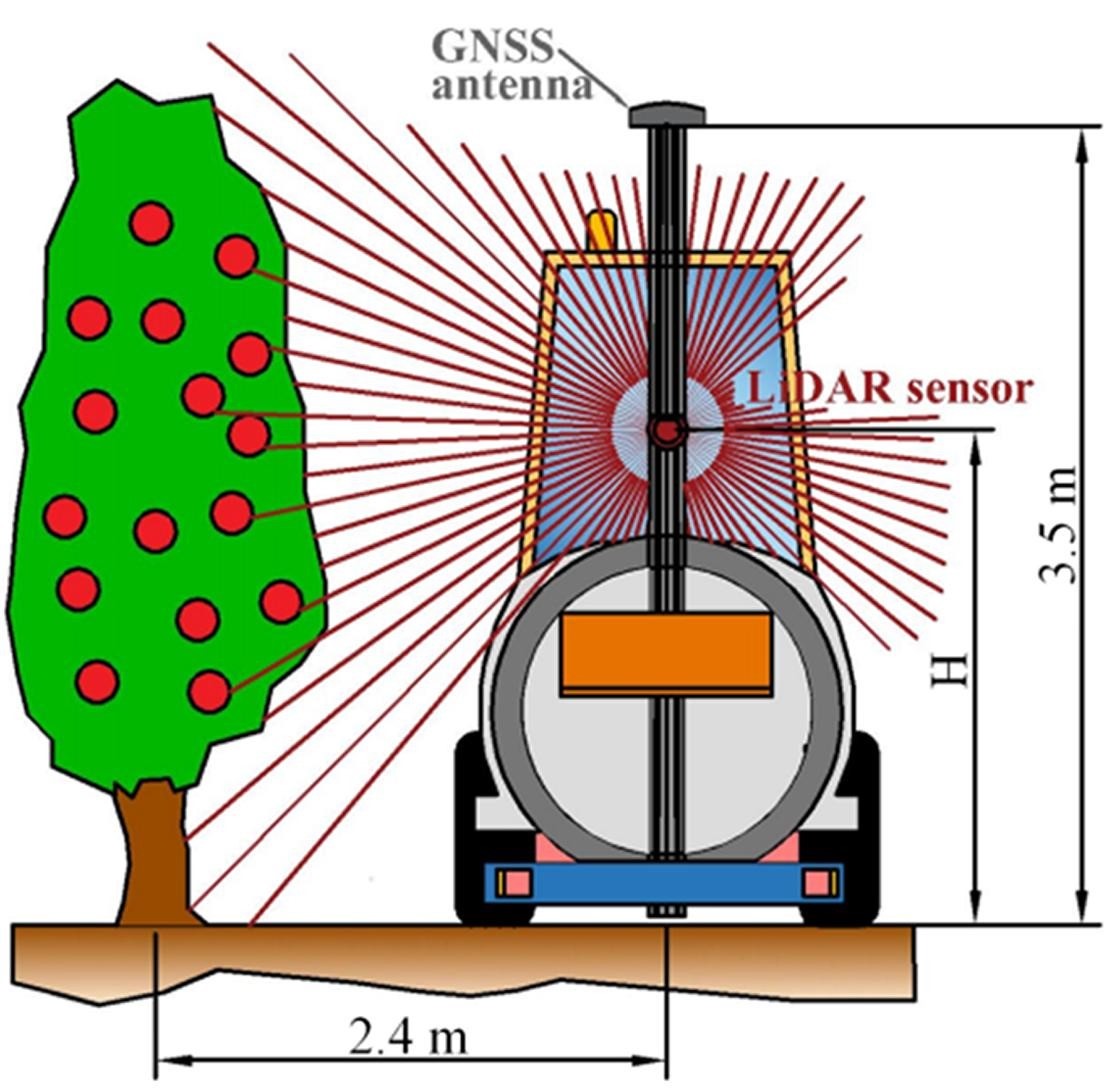

Analyzing and overcoming the effects of GNSS error on LiDAR based orchard parameters estimation

Authors: Guevara, J., Auat, F., Gené-Mola, J., Rosell-Polo, J.R., Gregorio, E., 2020. Analyzing and overcoming the effects of GNSS error on LiDAR based orchard parameters estimation. Computers and Electronics in Agriculture 170, 105255. https://doi.org/10.1016/j.compag.2020.105255

Abstract: Currently, 3D point clouds are obtained via LiDAR (Light Detection and Ranging) sensors to compute vegetation parameters to enhance agricultural operations. However, such a point cloud is intrinsically dependent on the GNSS (global navigation satellite system) antenna used to have absolute positioning of the sensor within the grove. Therefore, the error associated with the GNSS receiver is propagated to the LiDAR readings and, thus, to the crown or orchard parameters. In this work, we first describe the error propagation of GNSS over the laser scan measurements. Second, we present our proposal to overcome this effect based only on the LiDAR readings. Such a proposal uses a scan matching approach to reduce the error associated with the GNSS receiver. To accomplish this, we fuse the information from the scan matching estimations with the GNSS measurements. In the experiments, we statistically analyze the dependence of the grove parameters extracted from the 3D point cloud (specifically crown surface area, crown volume, and crown porosity) on the localization error. We carried out 150 trials with positioning errors ranging from 0.01 meters (ground truth) to 2 meters. When using only GNSS as a localization system, the results showed that errors associated with the estimation of vegetation parameters increased by more than 100% when the positioning error was equal to or greater than 1 meter. On the other hand, when our proposal was used as a localization system, the results showed that for the same case of 1 meter, the estimation of orchard parameters improved by 20% overall. However, for lower GNSS positioning errors, the estimation of orchard parameters was improved by up to 50% overall. These results suggest that our work could lead to better decisions in agricultural operations, which are based on foliar parameter measurements, without the use of external hardware.

Fruit detection and 3D location using instance segmentation neural networks and structure-from-motion photogrammetry

Authors: Gené-Mola, J., Sanz-Cortiella, R., Rosell-Polo, J.R., Morros, J.R., Ruiz-Hidalgo, J., Vilaplana, V., Gregorio, E., 2020. Fruit detection and 3D location using instance segmentation neural networks and structure-from-motion photogrammetry. Computers and Electronics in Agriculture 169, 105165. https://doi.org/10.1016/j.compag.2019.105165

Abstract: The development of remote fruit detection systems able to identify and 3D locate fruits provides opportunities to improve the efficiency of agriculture management. Most of the current fruit detection systems are based on 2D image analysis. Although the use of 3D sensors is emerging, precise 3D fruit location is still a pending issue. This work presents a new methodology for fruit detection and 3D location consisting of: (1) 2D fruit detection and segmentation using Mask R-CNN instance segmentation neural network; (2) 3D point cloud generation of detected apples using structure-from-motion (SfM) photogrammetry; (3) projection of 2D image detections onto 3D space; (4) false positives removal using a trained support vector machine. This methodology was tested on 11 Fuji apple trees containing a total of 1455 apples. Results showed that, by combining instance segmentation with SfM the system performance increased from an F1-score of 0.816 (2D fruit detection) to 0.881 (3D fruit detection and location) with respect to the total amount of fruits. The main advantages of this methodology are the reduced number of false positives and the higher detection rate, while the main disadvantage is the high processing time required for SfM, which makes it presently unsuitable for real-time work. From these results, it can be concluded that the combination of instance segmentation and SfM provides high performance fruit detection with high 3D data precision. The dataset has been made publicly available and an interactive visualization of fruit detection results is accessible at http://www.grap.udl.cat/documents/photogrammetry_fruit_detection.html.
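The F1-scores quoted above combine precision (the share of detections that are real fruits) and recall (the share of real fruits that are detected). The following short Python sketch shows how such a score is computed from detection counts. The function name and the counts in the usage example are hypothetical and are not taken from the paper.

```python
def detection_scores(true_pos, false_pos, false_neg):
    """Return (precision, recall, f1) for a set of detection counts."""
    detected = true_pos + false_pos   # everything the detector reported
    actual = true_pos + false_neg     # everything really present
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0  # harmonic mean
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    p, r, f1 = detection_scores(true_pos=80, false_pos=20, false_neg=20)
    print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

Because F1 is a harmonic mean, removing false positives (as the support vector machine step is meant to do) raises precision and therefore F1, provided that recall does not drop by a comparable amount.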

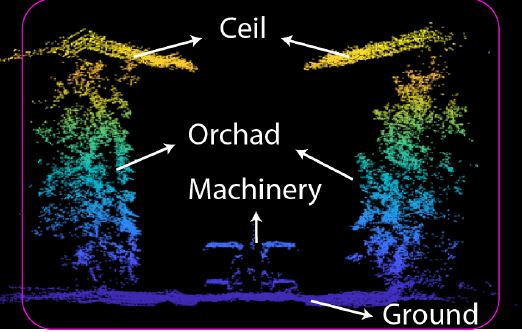

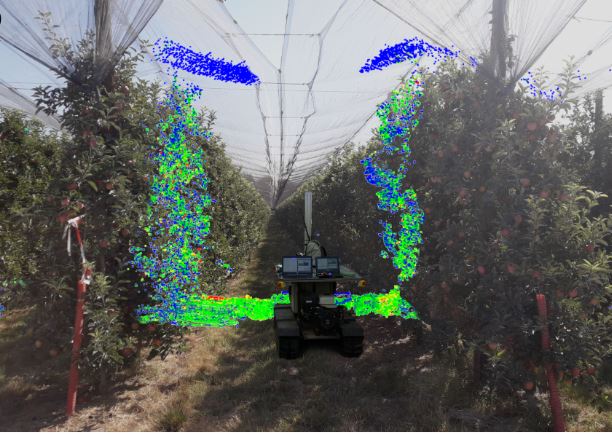

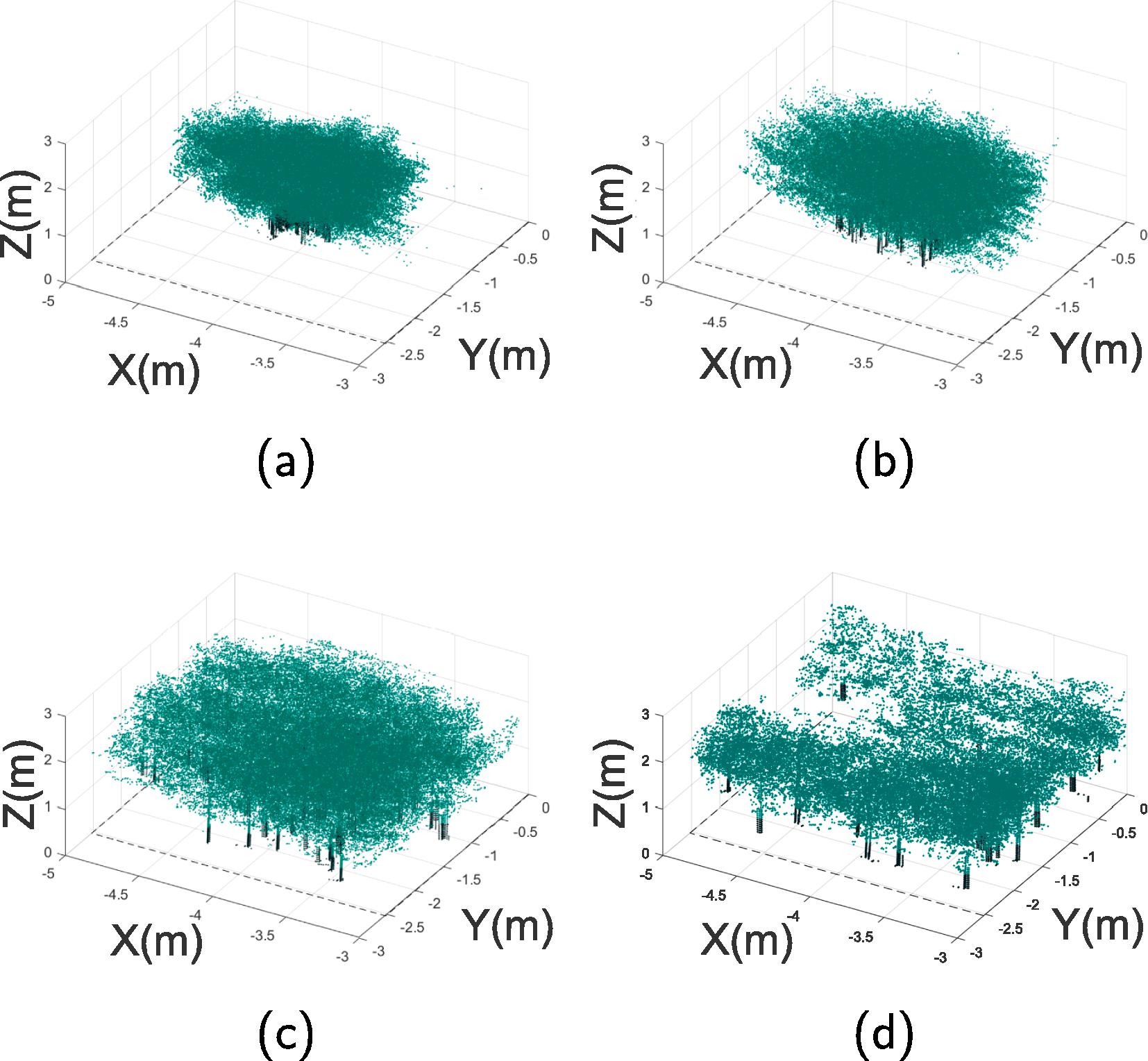

Fruit detection, yield prediction and canopy geometric characterization using LiDAR with forced air flow

Authors: Gené-Mola, J., Gregorio, E., Auat, F., Guevara, J., Llorens, J., Sanz-Cortiella, R., Escolà, A., Rosell-Polo, J.R., 2020. Fruit detection, yield prediction and canopy geometric characterization using LiDAR with forced air flow. Computers and Electronics in Agriculture 168, 105121. https://doi.org/10.1016/j.compag.2019.105121

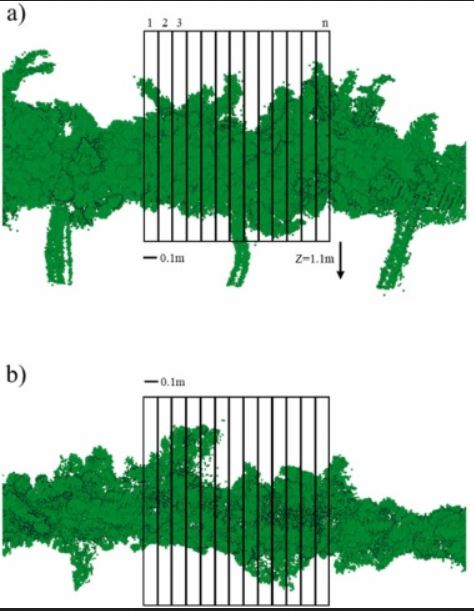

Abstract: Yield monitoring and geometric characterization of crops provide information about orchard variability and vigor, enabling the farmer to make faster and better decisions in tasks such as irrigation, fertilization and pruning, among others. When using LiDAR technology for fruit detection, fruit occlusions are likely to occur, leading to an underestimation of the yield. This work is focused on reducing fruit occlusions in LiDAR-based systems, tackling the problem with two different approaches: applying forced air flow by means of an air-assisted sprayer, and using multi-view sensing. These approaches are evaluated in fruit detection, yield prediction and geometric crop characterization. Experimental tests were carried out in a commercial Fuji apple (Malus domestica Borkh. cv. Fuji) orchard. The system was able to detect and localize more than 80% of the visible fruits, predict the yield with a root mean square error lower than 6% and characterize canopy height, width, cross-section area and leaf area. The forced air flow and multi-view approaches helped to reduce the number of fruit occlusions, locating 6.7% and 6.5% more fruits, respectively. Therefore, the proposed system can potentially monitor the yield and characterize the geometry in apple trees. Additionally, combining trials with and without forced air flow and multi-view sensing presented significant advantages for fruit detection as they helped to reduce the number of fruit occlusions.

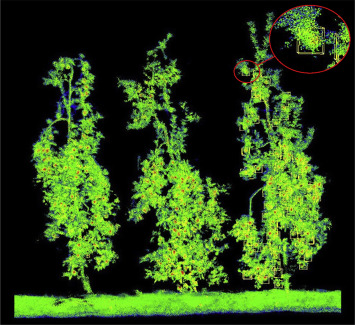

Fruit detection in an apple orchard using a mobile terrestrial laser scanner

3D point cloud models obtained for trees. https://ars.els-cdn.com/content/image/1-s2.0-S1537511019308128-gr2_lrg.jpg

Authors: Gené-Mola, J., Gregorio, E., Guevara, J., Auat, F., Sanz-Cortiella, R., Escolà, A., Llorens, J., Morros, J.R., Ruiz-Hidalgo, J., Vilaplana, V., Rosell-Polo, J.R., 2019. Fruit detection in an apple orchard using a mobile terrestrial laser scanner. Biosystems Engineering 187, 171-184. https://doi.org/10.1016/j.biosystemseng.2019.08.017

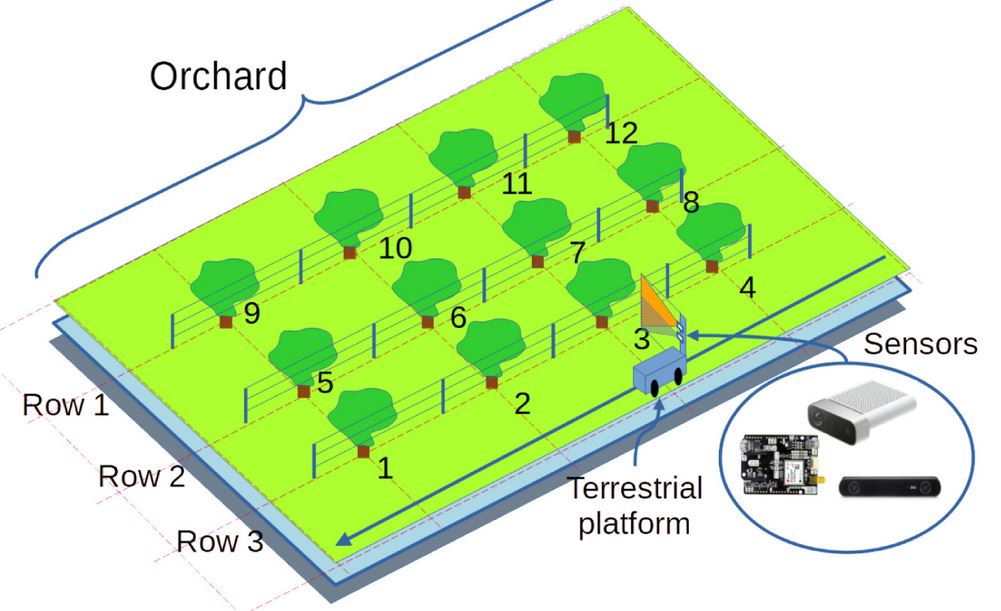

Abstract: The development of reliable fruit detection and localization systems provides an opportunity to improve the crop value and management by limiting fruit spoilage and optimising harvesting practices. Most proposed systems for fruit detection are based on RGB cameras and thus are affected by intrinsic constraints, such as variable lighting conditions. This work presents a new technique that uses a mobile terrestrial laser scanner (MTLS) to detect and localise Fuji apples. An experimental test focused on Fuji apple trees (Malus domestica Borkh. cv. Fuji) was carried out. A 3D point cloud of the scene was generated using an MTLS composed of a Velodyne VLP-16 LiDAR sensor synchronised with an RTK-GNSS satellite navigation receiver. A reflectance analysis of tree elements was performed, obtaining mean apparent reflectance values of 28.9%, 29.1%, and 44.3% for leaves, branches and trunks, and apples, respectively. These results suggest that the apparent reflectance parameter (at 905 nm wavelength) can be useful to detect apples. For that purpose, a four-step fruit detection algorithm was developed. By applying this algorithm, a localization success of 87.5%, an identification success of 82.4%, and an F1-score of 0.858 were obtained in relation to the total amount of fruits. These detection rates are similar to those obtained by RGB-based systems, but with the additional advantages of providing direct 3D fruit location information, which is not affected by sunlight variations. From the experimental results, it can be concluded that LiDAR-based technology and, particularly, its reflectance information, has potential for remote apple detection and 3D location.
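The gap between the mean apparent reflectance of apples (44.3%) and that of leaves, branches and trunks (about 29%) is what makes reflectance a usable cue. The sketch below only illustrates that idea with a simple threshold filter. It does not reproduce the paper's four-step algorithm, and the `LidarPoint` type, the function name and the 36% cut-off are assumptions chosen for the example.

```python
from typing import List, NamedTuple


class LidarPoint(NamedTuple):
    """Hypothetical point record: position in metres, apparent reflectance in percent."""
    x: float
    y: float
    z: float
    reflectance: float


def candidate_apple_points(points: List[LidarPoint],
                           min_reflectance: float) -> List[LidarPoint]:
    """Keep only the points whose apparent reflectance reaches the cut-off."""
    return [p for p in points if p.reflectance >= min_reflectance]


if __name__ == "__main__":
    cloud = [
        LidarPoint(0.10, 1.20, 1.50, 28.9),  # leaf-like reflectance
        LidarPoint(0.12, 1.21, 1.52, 29.1),  # branch/trunk-like reflectance
        LidarPoint(0.15, 1.19, 1.55, 44.3),  # apple-like reflectance
    ]
    # Assumed cut-off, placed between the reported class means.
    kept = candidate_apple_points(cloud, min_reflectance=36.0)
    print(f"{len(kept)} of {len(cloud)} points kept as apple candidates")
```

The reported values are class means, so individual points from different tree elements can overlap in reflectance. A threshold of this kind would therefore serve only as a pre-filter, with further steps needed to group the remaining points into individual fruits.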

Grant RTI2018-094222-B-I00 (PAgFRUIT project) by MCIN/AEI/10.13039/501100011033 and by “ERDF, a way of making Europe”, by the European Union.